Enterprise AI Fine-Tuning Solutions

Custom LLM Fine-Tuning

Transform your open-source AI models into business-ready, domain-specific experts. Our fine-tuning solutions adapt large language models to your proprietary data—legal, financial, healthcare, technical, or any specialized content—delivering accuracy, reliability, and actionable intelligence.

What We Offer

Business Value We Bring

Custom Domain Adaptation

Train models on your unique datasets for precise, context-aware responses.

Parameter-Efficient Fine-Tuning

Achieve enterprise-grade results quickly and cost-effectively, even on standard hardware.

Scalable Multi-Domain Support

Fine-tune across multiple industries or business units with consistent performance.

Autonomous AI Agents

Build AI that can reason, interact with tools, and execute multi-step workflows using your data.

Hybrid Knowledge Integration

Combine fine-tuned models with dynamic retrieval to minimize errors and improve accuracy.

Continuous Evaluation & Optimization

Benchmark, monitor, and refine AI behavior over time for sustained performance.

Rapid Deployment & Integration

Seamless connection to your applications, chatbots, research tools, or enterprise systems.

Advanced Training & Adaptation Techniques

Leverage cutting-edge approaches to maximize model efficiency, reasoning ability, and real-world usability.

Why Choose Our SLM Fine-Tuning?

Resource-Efficient

Train on a single GPU using quantization and low-rank adapters. Tuning only 0.01-0.5% of a model's parameters can deliver GLUE and MMLU gains comparable to full fine-tuning.
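
To make the parameter budget concrete, here is a rough back-of-the-envelope sketch of how small a low-rank adapter is relative to the full model. The model shape (7 billion parameters, 32 layers, hidden size 4096), the rank, and the choice of two adapted projections per layer are illustrative assumptions, not a description of any specific model or of our production settings.

```python
def lora_param_count(d_in: int, d_out: int, rank: int) -> int:
    """Parameters added by one low-rank adapter: A is (rank x d_in), B is (d_out x rank)."""
    return rank * (d_in + d_out)


def trainable_fraction(total_params: int, hidden: int, layers: int,
                       matrices_per_layer: int, rank: int) -> float:
    """Share of weights trained when only adapters on square projections are updated."""
    per_adapter = lora_param_count(hidden, hidden, rank)
    adapter_params = layers * matrices_per_layer * per_adapter
    return adapter_params / total_params


# Hypothetical 7B-parameter model: 32 layers, hidden size 4096,
# rank-8 adapters on two attention projections per layer.
fraction = trainable_fraction(
    total_params=7_000_000_000,
    hidden=4096,
    layers=32,
    matrices_per_layer=2,
    rank=8,
)
print(f"{fraction:.4%} of parameters are trainable")
```

Under these assumptions the adapters hold about 4.2 million weights, roughly 0.06% of the model, which sits inside the range quoted above. Raising the rank or adapting more projections moves the figure up proportionally.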

Tailored Performance

Improve reasoning, code review, or other enterprise tasks on models such as Granite 7B or Gemma 2, with accuracy gains of up to 40%.

Proven Methods

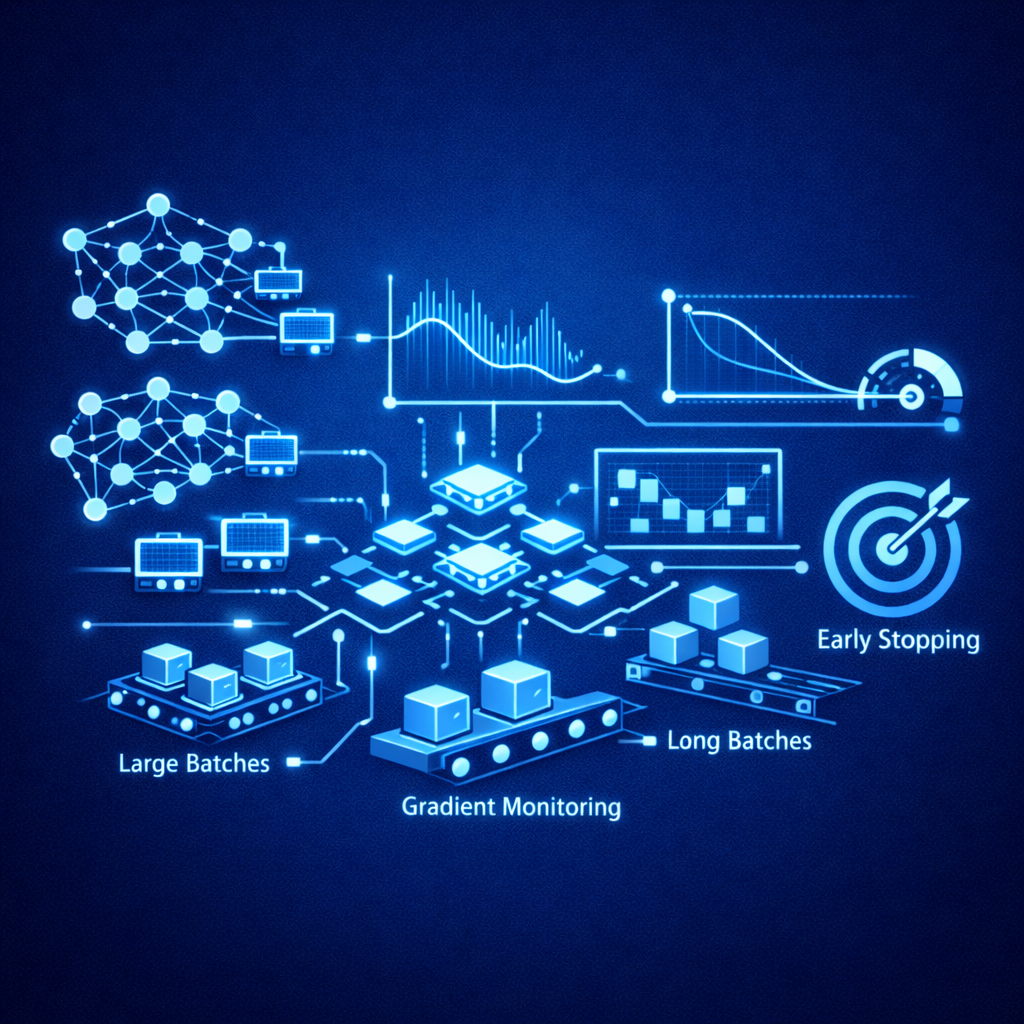

LoRA variants, adapters, and tuned training schedules (large batches, low learning rate) for fast convergence, with early stopping driven by gradient monitoring.
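
One way early stopping via gradient monitoring might look in practice is sketched below. It is a minimal illustration, not our exact procedure: the class name `GradientNormEarlyStopper`, the smoothing window, the improvement threshold, and the patience value are all hypothetical, and the gradient norms are simulated rather than taken from a real training run.

```python
import math
from collections import deque


class GradientNormEarlyStopper:
    """Signal a stop once the smoothed gradient norm stops shrinking.

    All defaults are hypothetical placeholders, not tuned values.
    """

    def __init__(self, window: int = 5, min_rel_improvement: float = 0.01,
                 patience: int = 3):
        self.window = deque(maxlen=window)
        self.min_rel_improvement = min_rel_improvement
        self.patience = patience
        self.best = math.inf
        self.stale_steps = 0

    def update(self, grad_norm: float) -> bool:
        """Record one gradient norm; return True when training should stop."""
        self.window.append(grad_norm)
        if len(self.window) < self.window.maxlen:
            return False  # not enough history to smooth yet
        smoothed = sum(self.window) / len(self.window)
        if smoothed < self.best * (1.0 - self.min_rel_improvement):
            self.best = smoothed  # meaningful improvement: reset the counter
            self.stale_steps = 0
        else:
            self.stale_steps += 1
        return self.stale_steps >= self.patience


# Simulated norms: a decaying phase followed by a plateau.
norms = [1.0 / (step + 1) for step in range(10)] + [0.1] * 20
stopper = GradientNormEarlyStopper()
stop_step = None
for step, norm in enumerate(norms):
    if stopper.update(norm):
        stop_step = step
        break
print("stopped at step", stop_step)
```

In a real run the norm would come from the optimizer step, and this signal would normally be combined with validation loss rather than used on its own.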

State-of-the-Art SLM Fine-Tuning

Elevate your AI with our expert fine-tuning for Small Language Models (SLMs). We apply cutting-edge PEFT methods such as QLoRA, DoRA, and PiSSA to customize 1-7B parameter models for your domain, delivering top performance with minimal resources.

Our Fine-Tuning Process & Pipeline

Requirement Analysis

Understand your business objectives, target tasks, and data availability.

Data Preparation

Curate and preprocess domain-specific datasets, including labeled examples tailored for your use case.

Model Selection

Choose the optimal foundation model and SLM variant based on performance and resource constraints.

Fine-Tuning Techniques

Employ advanced methods such as Low-Rank Adaptation (LoRA), other parameter-efficient tuning techniques, and layer freezing to maximize efficiency and mitigate risks like catastrophic forgetting.
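
For readers who want to see the idea behind LoRA with a frozen base layer, the toy sketch below may help. It uses plain Python lists instead of a deep learning framework so that it runs anywhere; the class `LoRALinear` and its helper functions are illustrative names, not a library API, and the tiny 2x2 weight is made up for the example.

```python
import random

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product, adequate for a toy example."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add_scaled(a: Matrix, b: Matrix, scale: float) -> Matrix:
    """Return a + scale * b element-wise."""
    return [[x + scale * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


class LoRALinear:
    """Frozen weight W (d_out x d_in) plus a trainable low-rank update B @ A."""

    def __init__(self, weight: Matrix, rank: int, alpha: float, seed: int = 0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight  # frozen: never updated during fine-tuning
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.b = [[0.0] * rank for _ in range(d_out)]  # zero init: no change at step 0
        self.scale = alpha / rank

    def forward(self, x: Matrix) -> Matrix:
        """x is (d_in x batch); returns W x + scale * B (A x)."""
        base = matmul(self.weight, x)
        update = matmul(self.b, matmul(self.a, x))
        return add_scaled(base, update, self.scale)

    def merged_weight(self) -> Matrix:
        """Fold the adapter into W for deployment: W + scale * B A."""
        return add_scaled(self.weight, matmul(self.b, self.a), self.scale)


layer = LoRALinear(weight=[[1.0, 2.0], [3.0, 4.0]], rank=1, alpha=2.0)
x = [[1.0], [1.0]]
print(layer.forward(x))  # matches the frozen layer's output while B is still zero
```

Because only `a` and `b` would receive gradient updates, the original weights stay intact, which is one reason this family of methods is associated with less catastrophic forgetting. After training, `merged_weight()` shows how the adapter can be folded back into a single matrix so inference costs nothing extra.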

Iterative Training & Validation

Use feedback-driven cycles and curriculum learning to progressively enhance model accuracy and robustness.

Deployment & Monitoring

Deliver the tuned model integrated into your workflow with ongoing performance monitoring and updates.

What Value Do We Bring to You?

Accelerate decision-making with AI that understands your context.

Reduce errors and hallucinations through hybrid fine-tuning and retrieval strategies.

Scale AI capabilities across teams and functions without heavy infrastructure costs.

Ensure your AI evolves with your business, continuously learning from new data.

Key Techniques We Use

| Technique | Benefits | Use Case |
|---|---|---|
| QLoRA | 4-bit quantization, GPU-friendly | Low-compute domains |
| DoRA | Magnitude/direction split | Reasoning tasks |
| PiSSA | Faster init/convergence | GLUE benchmarks |
| Adapters | Modular, mergeable | Multi-task setups |
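
As a rough illustration of the magnitude/direction split listed for DoRA, the sketch below decomposes a weight matrix into per-column magnitudes and a direction matrix, then rebuilds it after a direction update. The function names and the small matrices are hypothetical, `delta` merely stands in for a trained low-rank update, and the code assumes every column has a non-zero norm.

```python
import math

Matrix = list[list[float]]


def column_norms(w: Matrix) -> list[float]:
    """L2 norm of each column of w."""
    return [math.sqrt(sum(v * v for v in col)) for col in zip(*w)]


def decompose(w: Matrix) -> tuple[list[float], Matrix]:
    """Split w into per-column magnitudes and unit-norm direction columns."""
    norms = column_norms(w)
    direction = [[v / n for v, n in zip(row, norms)] for row in w]
    return norms, direction


def recompose(magnitude: list[float], v: Matrix) -> Matrix:
    """Rebuild a weight as magnitude * v / ||v||, applied column by column."""
    norms = column_norms(v)
    return [[m * x / n for x, m, n in zip(row, magnitude, norms)] for row in v]


w0 = [[3.0, 0.0], [4.0, 2.0]]
magnitude, direction = decompose(w0)

# Hypothetical low-rank update, standing in for a trained B @ A product.
delta = [[0.5, 0.0], [0.0, 0.5]]
updated = [[base + step for base, step in zip(rb, rs)] for rb, rs in zip(w0, delta)]

# The update changes where each column points; its length stays under
# the control of the separate magnitude vector.
adapted = recompose(magnitude, updated)
print(column_norms(adapted))
```

Keeping the magnitude as its own trainable vector lets the direction and the scale of each column be adjusted independently, which is the property the table refers to.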

Optional Enhancements to Boost Appeal

Showcase case studies of fine-tuning for niche applications (e.g., legal document analysis, technical troubleshooting).

Highlight cost savings and latency improvements compared to large model alternatives.

Offer customizable service tiers based on dataset size, model complexity, and deployment preferences.

Provide demo or trial access to illustrate model improvements on client-specific data.

Emphasize security compliance certifications and data governance best practices.

Business Impact

Deploy production-ready SLMs that can outperform larger closed models on your data, ideal for edge devices, cost-sensitive apps, or agentic systems running alongside GraphRAG. We cover the work end to end: data preparation, tuning, evaluation, and Kubernetes deployment.

Ready to Optimize? Contact us for a free SLM assessment and pilot. Turn your prototypes into enterprise AI today.